Sony has filed a patent application with the USPTO for a smart glasses feature that helps find lost objects using time-stamped images. The Japanese conglomerate's filing is aimed at saving people a surprising amount of time and money.

Americans spend 2.5 days a year looking for misplaced items, and approximately $2.7 billion replacing lost objects. Sony cited these estimates in its filing to highlight how common and how costly forgetfulness can be. From well-documented causes like age and stress to more novel ones like the “Doorway Effect”, there are countless situations where object-finding technology may shine, and a promising yet neglected device could be what brings that technology into the limelight.

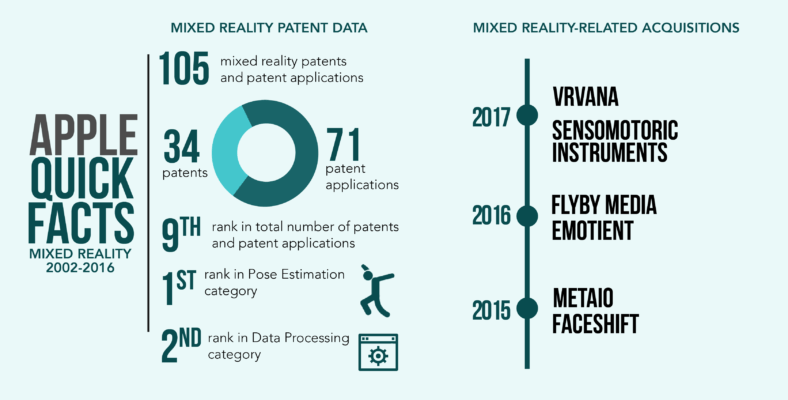

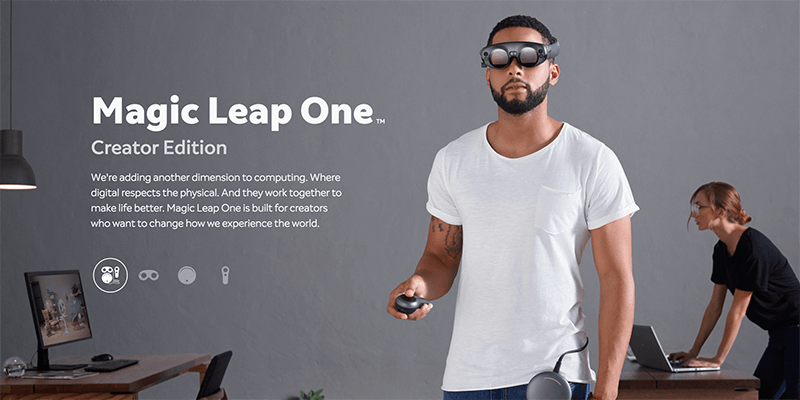

Smart glasses were the shiniest piece of consumer technology in the early 2010s, especially around the launch of Google Glass. With functionality that simply could not justify its price, Google’s smart eyewear went from overnight media darling to yet another slow-burn Silicon Valley project. And the passion for smart glasses has indeed kept silently burning. Sony, with its own failed attempt in SmartEyeglass, appears adamant about a smart eyewear revolution, as evidenced by its continued production of intellectual property related to such fledgling devices.

To help people locate misplaced objects, Sony’s invention employs artificial intelligence, and lots of pictures.

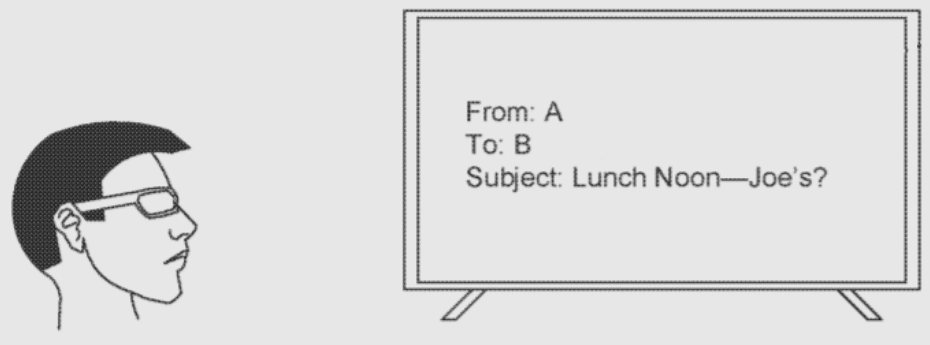

Fig. 1. Illustration of a use-case example in which smart glasses capture images of text on a computer display.

The feature may be always on, with the smart glasses capturing images throughout their wearer’s day and perhaps time-stamping each one. The patent application does not specify where the photos would be stored, but possibilities include local memory such as a solid-state drive or remote cloud storage. The images are processed with artificial intelligence or machine learning techniques, which identify objects, text, and any actions performed on either.

Sony presents an example scenario wherein a green cup is stored in a closet. The system creates a map that associates the image of the green cup with multiple objects around it. The timeline before and after the green cup was last imaged is also noted.

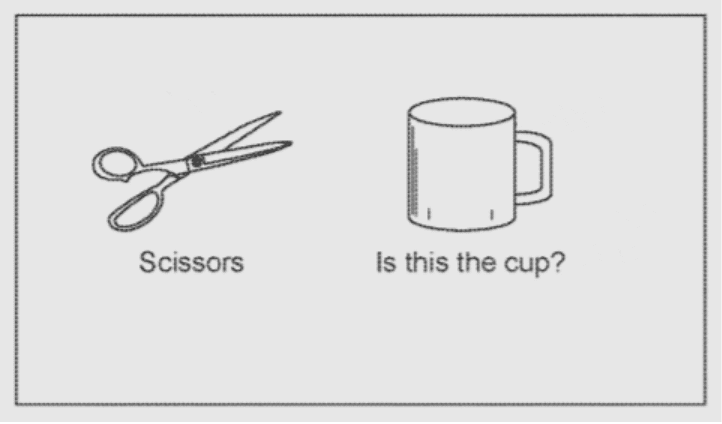

Should the user seek to locate the green cup at some point in the future, they may simply say “green cup” and the smart glasses would visualize multiple objects associated with the item.
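One way to picture the association map and the “green cup” query is the minimal Python sketch below. It assumes a recognizer has already reduced each captured image to a timestamp and a list of object labels. The `Frame` type, the `build_association_map` and `associated_objects` functions, and the sample labels are hypothetical illustrations, not details taken from Sony’s filing.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    """One captured image, reduced to a timestamp and detected labels (hypothetical)."""
    timestamp: float          # seconds since an arbitrary reference time
    labels: tuple[str, ...]   # labels an object recognizer is assumed to have produced


def build_association_map(frames):
    """Count how often each pair of labels appears together in the same frame."""
    assoc = defaultdict(Counter)
    for frame in frames:
        unique = set(frame.labels)
        for label in unique:
            for other in unique - {label}:
                assoc[label][other] += 1
    return assoc


def associated_objects(assoc, query, top_n=3):
    """Return the labels most often seen alongside the queried object."""
    return [label for label, _ in assoc[query].most_common(top_n)]


# Made-up sample data: the green cup is imaged twice in a closet.
frames = [
    Frame(100.0, ("green cup", "closet shelf", "towels")),
    Frame(105.0, ("green cup", "closet shelf")),
    Frame(300.0, ("laptop", "desk")),
]
assoc = build_association_map(frames)
print(associated_objects(assoc, "green cup"))  # ['closet shelf', 'towels']
```

In this toy version, saying “green cup” would surface the closet shelf and the towels as hints about where the cup was last seen.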

If two green cups were photographed at different locations, the system would refer to the detected environment and timestamps to tell them apart.
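The filing, as described here, does not say exactly how environment and timestamps would be combined. As a rough sketch under that caveat, the Python below assumes each sighting carries an environment label and keeps only the latest sighting per environment, listing the most recent environment first. The `Sighting` type, `rank_candidates` function, and sample values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sighting:
    """A single detection of the queried object (hypothetical structure)."""
    timestamp: float   # seconds since an arbitrary reference time
    environment: str   # label for the detected surroundings, e.g. "kitchen"


def rank_candidates(sightings):
    """Keep the latest sighting per environment, most recent environment first."""
    latest = {}
    for s in sightings:
        if s.environment not in latest or s.timestamp > latest[s.environment].timestamp:
            latest[s.environment] = s
    return sorted(latest.values(), key=lambda s: s.timestamp, reverse=True)


# Made-up sample data: green cups seen in two different places.
green_cup_sightings = [
    Sighting(100.0, "kitchen"),
    Sighting(250.0, "closet"),
    Sighting(180.0, "kitchen"),
]
for candidate in rank_candidates(green_cup_sightings):
    print(candidate.environment, candidate.timestamp)
# closet 250.0
# kitchen 180.0
```

Showing candidates one at a time in this order is only one plausible design choice; a real system might weigh the environment more heavily than recency.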

Sony says presenting objects associated with the queried lost item could help point users to the right place, especially when no location or time of loss is known. Associations are made with objects detected within proximity of the misplaced item, either physically or temporally.
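Temporal proximity can be illustrated in a similar way. The sketch below assumes a flat log of `(timestamp, label)` detections and returns every other label seen within a fixed window around the last sighting of the queried item. The `temporally_near` function, the 60-second window, and the sample log are assumptions made for illustration, not parameters from the patent application.

```python
def temporally_near(detections, query, window_s=60.0):
    """Return labels seen within window_s seconds of the last sighting of query."""
    times = [t for t, label in detections if label == query]
    if not times:
        return []  # the queried object was never imaged
    last_seen = max(times)
    near = []
    for t, label in detections:
        # Look both before and after the last sighting, skipping duplicates.
        if label != query and abs(t - last_seen) <= window_s and label not in near:
            near.append(label)
    return near


# Made-up detection log: (timestamp in seconds, object label).
detections = [
    (90.0, "hallway mirror"),
    (100.0, "green cup"),
    (130.0, "closet door"),
    (900.0, "car keys"),
]
print(temporally_near(detections, "green cup"))  # ['hallway mirror', 'closet door']
```

Here the car keys are excluded because they were imaged long after the cup, while the mirror and closet door fall inside the window and could serve as location hints.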

Fig. 2. Illustration of an example screenshot consistent with the present invention. A prompt may be presented as to whether the image is of the desired object, and if the user answers “no,” another image is presented.

To help improve the smart glasses’ ability to identify objects, they may be configured to additionally track the user’s gaze. Eye-tracking could better inform the system of particular objects of interest, since images taken throughout the day may follow a smart glasses wearer’s focus.

The featured patent application, “Smart Glasses Lost Object Assistance”, was filed with the USPTO on March 10, 2020 and published thereafter on September 16, 2021. The listed applicant is Sony Corporation. The listed inventor is Bibhudendu Mohapatra.