Sphere, the iconic Las Vegas venue, is getting a second location.

Sphere Entertainment and Abu Dhabi’s Department of Culture and Tourism (DCT Abu Dhabi) have announced plans to bring Sphere to the UAE capital, following the debut of the $2.3 billion Las Vegas venue in September 2023. The second location promises to match the original’s seating capacity of 20,000. Under the agreement, DCT Abu Dhabi will finance the construction and pay Sphere a franchise fee for the right to build the venue and use its designs, technology, and intellectual property. Last October, Sphere registered three trademarks relating to “Exosphere” in the UAE, Qatar, and Oman.

In our 2023 Las Vegas Sphere article, we explored the patenting activity of MSG Entertainment, now called Sphere Entertainment, the company behind this iconic venue. We uncovered some of the foundational technologies driving its groundbreaking capabilities, which earned it a spot in TIME’s Best Inventions of 2023 list. From expansive 16K LED displays and advanced audio systems to immersive atmospheric effects and tailored experiences, the article showcased how intellectual property underpins this remarkable fusion of architecture and cutting-edge technology.

Sphere Patent Activity

Sphere’s patent portfolio has grown significantly since last year, from around 70 to over 100 patents and applications across more than 40 unique families. Filing activity has remained consistently high, with 2024 seeing the most publications and grants so far. More applications are expected to surface in the coming months, since patent applications are generally published 18 months after their earliest priority date.

The company’s filings at the European Patent Office (EPO) also increased, likely in connection with previously reported plans for a new Sphere venue in the U.K., although those plans were officially withdrawn earlier this year.

Earlier this year, Sphere also completed its acquisition of HOLOPLOT, a Berlin-based audio technology company. We previously explored HOLOPLOT’s beamforming and wave field synthesis technology, which is integrated into the Sphere Immersive Sound system. The acquisition also gave Sphere ownership of HOLOPLOT’s patents and other intellectual property rights.

As of this writing, Sphere has a total of 118 patent publications: 48 issued patents, 56 pending applications, and 14 lapsed filings. Notably, Sphere was granted a South Korean patent for its integrated audiovisual system (KR102713539B1) just last month, which aligns with the company’s reported plans to build a “K-pop Sphere” in the country beginning in 2025. The company also secured a similar patent in Japan and a virtual camera-related patent in China, both issued this year.

Sphere patents filed per jurisdiction

| Jurisdiction | Lapsed | Pending | Granted | Total |
|---|---|---|---|---|
| US | 2 | 31 | 45 | 78 |
| EPO | 11 | 16 | 0 | 27 |
| South Korea | 0 | 2 | 1 | 3 |
| Japan | 0 | 2 | 1 | 3 |
| China | 0 | 2 | 1 | 3 |
| Canada | 0 | 2 | 0 | 2 |
| Australia | 1 | 1 | 0 | 2 |

Featured Sphere Patents

Beyond the foundational patents that underpin the design and technological capabilities of the iconic venue, innovators at Sphere have also been patenting a range of groundbreaking technologies that could form the basis for their envisioned future of entertainment. Below, we explore some of these innovations.

Sterne, Kessler, Goldstein & Fox is the listed firm that secured the featured patents for Sphere Entertainment.

Event simulator and collaboration in a VR environment

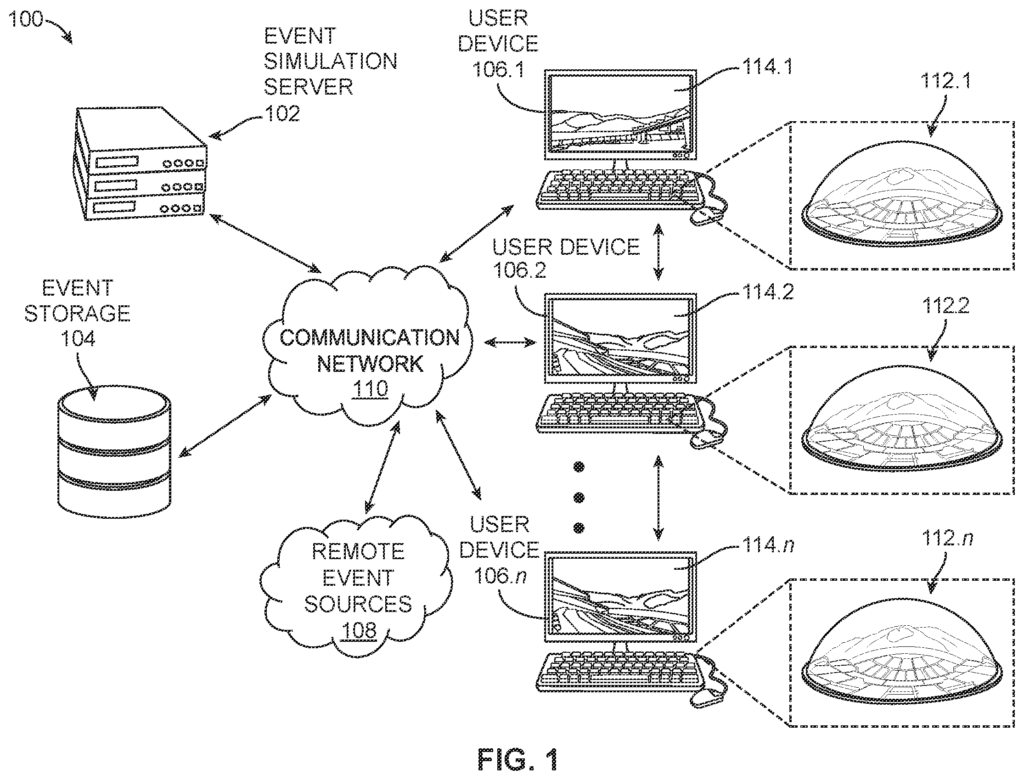

Sphere Studios has been developing innovative tools to enhance audience immersion and interaction. While the ’141 patent explores multi-user performer interactions, the ’301 patent focuses on event simulation for multi-user collaboration within a VR environment. It addresses limitations in traditional event planning by enabling remote simulation and optimization of live events through AR/VR technologies.

Designing events for venues with unique layouts and technical systems often demands costly, time-consuming site visits. Traditional tools also lack the real-time interactivity needed for effective collaboration, especially among dispersed teams, making it challenging to customize content or explore creative modifications—particularly for a venue as distinctive as Sphere.

U.S. Patent No. 12,086,301 addresses these challenges by mapping digital representations of real-world events onto virtual models of the venue. Using AR/VR headsets, mobile devices, or metaverse technologies, participants can navigate the venue, view events from various perspectives, and collaboratively adjust lighting, sound, and effects in real time. Changes are synchronized across all users, and the system can simulate sensory effects such as wind, scent, and temperature, supporting both creative work and logistical planning.

Meanwhile, performers could rehearse their choreography virtually, ensuring seamless alignment with props, visual effects, and stage layouts prepared for the real-world venue. This approach not only redefines how events can be planned at Sphere but also opens the door for artists to create highly interactive, immersive live experiences that blend physical and virtual elements in unprecedented ways.
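The ’301 patent does not publish source code, but the real-time synchronization described above follows a familiar pattern: one shared copy of the scene state, with every edit broadcast to all participants. The Python sketch below illustrates that pattern under our own assumptions; `SharedVenueSession`, `LocalView`, and parameter names such as `stage_light_intensity` are hypothetical, and a real system would add networking, conflict handling, and rendering.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# Hypothetical sketch: class names, methods, and parameter keys are
# illustrative and are not taken from the '301 patent.
Listener = Callable[[str, str, float], None]


@dataclass
class SharedVenueSession:
    """Single authoritative copy of the simulated venue's adjustable settings."""
    params: Dict[str, float] = field(default_factory=dict)
    listeners: Dict[str, Listener] = field(default_factory=dict)
    version: int = 0

    def join(self, user: str, on_change: Listener) -> Dict[str, float]:
        # Register the participant and hand back a snapshot of the current state.
        self.listeners[user] = on_change
        return dict(self.params)

    def set_param(self, user: str, key: str, value: float) -> int:
        # Apply the edit once, then broadcast it to every participant
        # (including the author) so all views stay identical.
        self.params[key] = value
        self.version += 1
        for notify in self.listeners.values():
            notify(user, key, value)
        return self.version


@dataclass
class LocalView:
    """One participant's rendered copy of the venue (headset, phone, etc.)."""
    name: str
    state: Dict[str, float] = field(default_factory=dict)

    def on_change(self, author: str, key: str, value: float) -> None:
        self.state[key] = value


session = SharedVenueSession(params={"stage_light_intensity": 0.5})
director = LocalView("director")
designer = LocalView("lighting_designer")
director.state.update(session.join(director.name, director.on_change))
designer.state.update(session.join(designer.name, designer.on_change))

session.set_param(designer.name, "stage_light_intensity", 0.8)
session.set_param(director.name, "wind_effect_speed", 3.0)
print(director.state == designer.state == session.params)
```

Routing every edit through a single authoritative session is the simplest way to keep all views consistent; in this sketch, concurrent edits to the same parameter simply resolve to whichever arrives last.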

The ‘301 Patent, entitled “System for multi-user collaboration within a virtual reality environment,” was filed on July 8, 2022 and was issued on September 10, 2024. The patent lists the following inventors: Vlad Spears, Stephen Hitchcock, Emily Saunders, Sasha Brenman, Michael Romaszewicz, Michael Rankin, and Ciera Jones.

Voxel-based heatmaps for virtual object placement

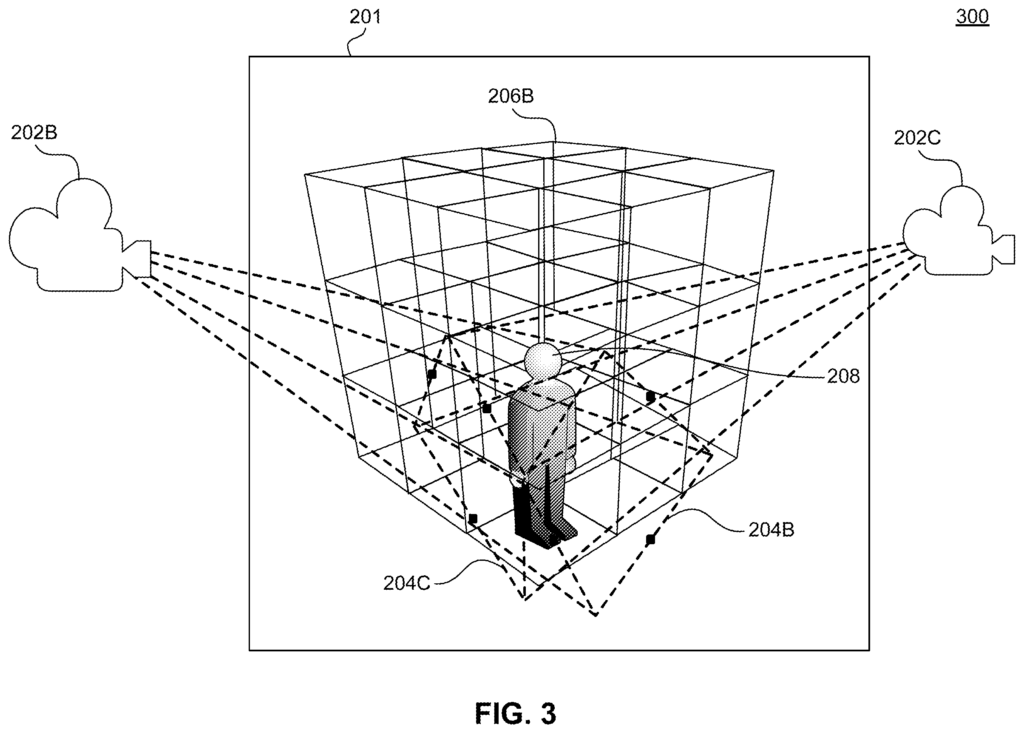

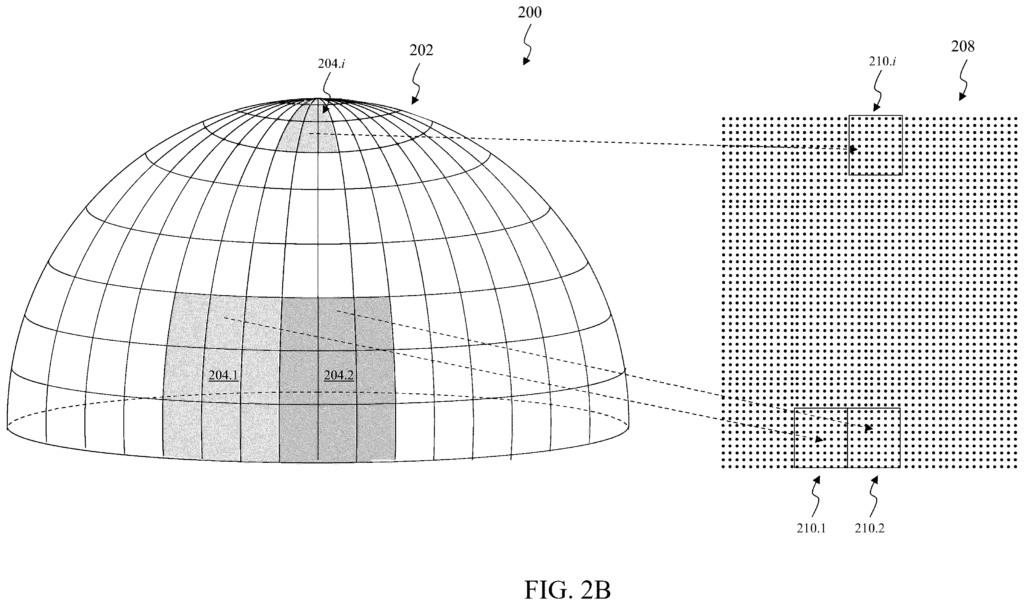

Innovators at Sphere are keen on the transformative potential of metaverse technologies to deliver experiences in 3D virtual environments. However, traditional methods for placing virtual objects in these environments often struggle in complex scenarios, especially those involving multiple virtual cameras or limited viewer interactivity. Legacy systems rely heavily on manual placement or basic goal-oriented intelligence, which fall short in optimizing object visibility across overlapping fields of view and offering the nuanced creative control essential for compelling storytelling.

These shortcomings disrupt the seamless integration of virtual elements, reducing the engagement and impact of interactive experiences.

At Sphere, immersive audiovisual experiences rely on multiple screens that display overlapping yet unique perspectives of vibrant 3D virtual environments. The voxel-based heat map technology described in U.S. Patent No. 12,094,074 helps designers position virtual objects with precision and adaptability, ensuring these elements enhance the visual story from any viewing angle. For example, a virtual waterfall could be placed so that it prominently features in the central field of view for most attendees while subtle reflective elements add depth to peripheral screens. Similarly, a digital performer might seamlessly “move” across different screens, maintaining a synchronized presence while offering exclusive moments to specific sections of the audience.

Real-time responsiveness

This technology also enables real-time responsiveness, allowing digital characters or visual elements to react dynamically to audience movements or environmental cues. Whether it’s a virtual sculpture shifting to engage viewers or interactive light displays adjusting based on crowd behavior, the patent’s innovative framework supports richly layered, interactive storytelling experiences.

The ’074 patent introduces a method for intelligently guiding virtual object placement within 3D virtual spaces. By partitioning the environment into voxels and assigning weights based on the fields of view of virtual cameras, it creates a dynamic heat map that tracks visibility and interaction patterns. Designers can then align object placement with creative goals, such as maximizing visibility or strategically concealing elements. Because it supports multiple virtual cameras and customizable parameters, this scalable approach enhances storytelling, usability, and the immersive potential of Sphere’s virtual environments.
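A minimal Python sketch of the voxel heat map idea, assuming each virtual camera is modeled as a simple view cone with a range limit and a designer-chosen weight. The `VirtualCamera` class, the cone visibility test, and the toy scene are illustrative assumptions rather than the ’074 patent’s method, and occlusion between objects is ignored.

```python
import math
from dataclasses import dataclass
from itertools import product
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Index = Tuple[int, int, int]


@dataclass
class VirtualCamera:
    position: Vec3
    direction: Vec3        # need not be normalized
    half_fov_deg: float    # half-angle of a simple circular view cone
    max_range: float
    weight: float = 1.0    # how much this camera's view matters to the designer


def _sees(cam: VirtualCamera, point: Vec3) -> bool:
    # A point counts as visible if it lies inside the camera's cone and range.
    offset = [p - c for p, c in zip(point, cam.position)]
    dist = math.sqrt(sum(o * o for o in offset))
    if dist == 0 or dist > cam.max_range:
        return False
    dir_len = math.sqrt(sum(d * d for d in cam.direction))
    cos_angle = sum(o * d for o, d in zip(offset, cam.direction)) / (dist * dir_len)
    return cos_angle >= math.cos(math.radians(cam.half_fov_deg))


def voxel_heat_map(origin: Vec3, voxel_size: float, dims: Index,
                   cameras: List[VirtualCamera]) -> Dict[Index, float]:
    # Partition the box into voxels and sum the weights of the cameras
    # that can see each voxel's center.
    heat: Dict[Index, float] = {}
    for idx in product(*(range(n) for n in dims)):
        center = tuple(o + (i + 0.5) * voxel_size for o, i in zip(origin, idx))
        heat[idx] = sum(cam.weight for cam in cameras if _sees(cam, center))
    return heat


# Toy scene: a row of four voxels seen by a "main" and a "side" camera.
cameras = [
    VirtualCamera((-1.0, 0.5, 0.5), (1.0, 0.0, 0.0), 30.0, 3.0, weight=2.0),
    VirtualCamera((5.0, 0.5, 0.5), (-1.0, 0.0, 0.0), 30.0, 4.0, weight=1.0),
]
heat = voxel_heat_map((0.0, 0.0, 0.0), 1.0, (4, 1, 1), cameras)
most_visible = max(heat, key=heat.get)   # place a hero object here
least_visible = min(heat, key=heat.get)  # or hide a surprise here
```

Taking the hottest voxel favors visibility across the weighted cameras, while the coldest suits elements meant to stay hidden; a production tool would also handle occlusion and much finer grids.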

The ‘074 Patent, entitled “Volumetric heat maps for intelligently distributing virtual objects of interest,” was filed on July 1, 2022 and was issued on September 17, 2024. The patent lists Stephen Hitchcock and Emily Saunders as inventors.

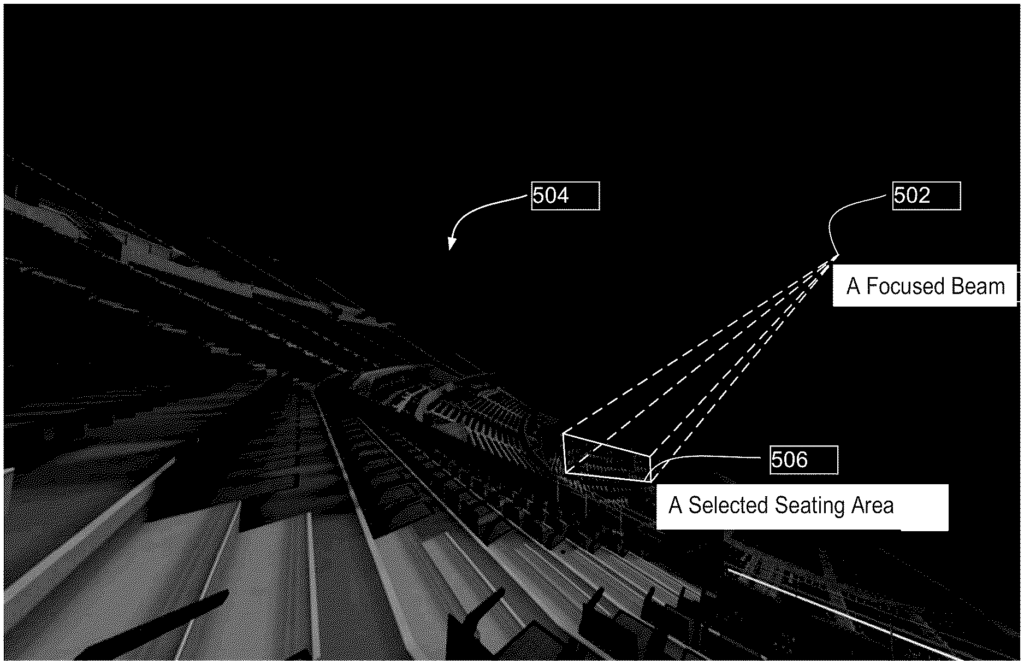

“Seeing” sound

Stunning visuals need an equally immersive audio experience. At Sphere, where the architecture demands precision, traditional spatial audio tools fall short. Configuring directional sound for large venues is often slow and inefficient, relying on auditory cues alone. Most tools lack visual aids for real-time tuning, making it hard to map sound coverage or adapt studio setups to live spaces.

U.S. Patent No. 11,956,624 offers a solution: systems and methods for light-based spatial audio metering using, for example, extended reality (XR) devices such as AR/VR headsets and controllers. The technology visually maps sound coverage patterns onto a virtual model of the venue using volumetric light beams that align with audio outputs and encode specific audio characteristics such as spectral range, temporal dynamics, and spatial directivity.
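To make the idea of encoding audio characteristics into light more concrete, here is a hedged Python sketch that turns one short audio frame into beam parameters: signal level drives brightness, spectral centroid (a rough proxy for where the energy sits in the frequency range) drives color, and the loudspeaker’s coverage angle sets the beam’s cone. These mappings, the `LightBeam` class, and the -60 dBFS floor are our own assumptions; the ’624 patent’s actual encoding may differ.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class LightBeam:
    brightness: float           # 0..1, driven by signal level
    hue_deg: float              # 0 (red, bass-heavy) .. 240 (blue, treble-heavy)
    cone_half_angle_deg: float  # driven by the loudspeaker's coverage angle


def rms_dbfs(frame: Sequence[float]) -> float:
    # Root-mean-square level relative to full scale (samples in -1..1).
    rms = math.sqrt(sum(x * x for x in frame) / len(frame))
    return 20.0 * math.log10(max(rms, 1e-12))


def spectral_centroid(frame: Sequence[float], sample_rate: float) -> float:
    # Magnitude-weighted mean frequency from a naive DFT (fine for short frames).
    n = len(frame)
    total = weighted = 0.0
    for k in range(n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(frame))
        im = sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(frame))
        mag = math.hypot(re, im)
        total += mag
        weighted += mag * k * sample_rate / n
    return weighted / total if total else 0.0


def encode_beam(frame: Sequence[float], sample_rate: float, coverage_deg: float,
                floor_db: float = -60.0, f_low: float = 20.0,
                f_high: float = 20000.0) -> LightBeam:
    level = rms_dbfs(frame)
    brightness = min(1.0, max(0.0, (level - floor_db) / -floor_db))
    # Place the centroid on a log-frequency axis, then map it to hue.
    centroid = max(spectral_centroid(frame, sample_rate), f_low)
    pos = math.log(centroid / f_low) / math.log(f_high / f_low)
    hue = 240.0 * min(1.0, max(0.0, pos))
    return LightBeam(brightness, hue, coverage_deg / 2.0)


def sine(freq: float, amp: float, sample_rate: int = 48000, n: int = 480) -> List[float]:
    return [amp * math.sin(2 * math.pi * freq * t / sample_rate) for t in range(n)]


bass = encode_beam(sine(100, 0.5), 48000, coverage_deg=90)
treble = encode_beam(sine(5000, 0.5), 48000, coverage_deg=60)
quiet = encode_beam(sine(100, 0.005), 48000, coverage_deg=90)
```

Running `encode_beam` on successive frames would let brightness and color track the temporal dynamics of the content, for example a beam drifting toward red during bass-heavy passages.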

Navigating the virtual venue

These light beams simulate audio properties (e.g., direction, shape, and coverage area), allowing users to interactively navigate the virtual venue, view sound from different perspectives, and make real-time adjustments to parameters like loudness, frequency spectrum, and spatial coverage. This enables efficient audio calibration and enhances studio-to-venue translation, reducing logistical challenges and providing more precise audio configurations for various types of events.

Reimagining live performances

The technology reimagines how interactive live performances are planned and executed. For example, sound engineers and production teams at Sphere could design an exhibition or prepare for a live concert using VR headsets or HUDs. As they step into the metaverse, they access a virtual representation of the venue where they can “see” sound. Light beams visualize the direction, coverage, and intensity of each audio channel, mapping the interaction of sound with the venue’s unique acoustics and seating arrangement.

XR-based visualization

Sphere’s unique geometry amplifies the need for precise sound distribution. The ‘624 patent’s XR-based visualization ensures that sound can reach every corner of the venue as intended, regardless of seating section complexity. In scenarios such as a live concert or other audiovisual showcases, engineers can anticipate and address potential acoustic overlaps or dead zones before the event begins, ensuring consistent auditory experiences. Furthermore, the capability to visualize rhythmic variations in source content, such as transitions between bass-heavy segments and melodic sections, empowers artists and engineers to craft dynamic performances that resonate with audiences visually and aurally.
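Dead zones and overlaps can be illustrated with a purely geometric check. The sketch below uses a simplified top-down (2D) model that we assume for illustration: it counts how many loudspeaker coverage cones reach each seat, where zero flags a potential dead zone and more than one flags an overlap. The `Speaker` fields and the seat layout are hypothetical, and real acoustic modeling would also account for level falloff, reflections, and Sphere’s 3D seating bowl.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[float, float]


@dataclass
class Speaker:
    name: str
    position: Point
    aim_deg: float       # horizontal direction the array points (0 = +x axis)
    coverage_deg: float  # full horizontal coverage angle
    max_range: float     # beyond this distance the seat counts as uncovered


def covers(speaker: Speaker, seat: Point) -> bool:
    dx = seat[0] - speaker.position[0]
    dy = seat[1] - speaker.position[1]
    dist = math.hypot(dx, dy)
    if dist == 0 or dist > speaker.max_range:
        return False
    bearing = math.degrees(math.atan2(dy, dx))
    # Smallest angle between the seat's bearing and the aim direction (0..180).
    off_axis = abs((bearing - speaker.aim_deg + 180.0) % 360.0 - 180.0)
    return off_axis <= speaker.coverage_deg / 2.0


def audit_coverage(speakers: List[Speaker], seats: Dict[str, Point]):
    counts = {sid: sum(covers(sp, pos) for sp in speakers) for sid, pos in seats.items()}
    dead_zones = sorted(sid for sid, n in counts.items() if n == 0)
    overlaps = sorted(sid for sid, n in counts.items() if n > 1)
    return counts, dead_zones, overlaps


speakers = [
    Speaker("left_array", (0.0, 0.0), aim_deg=135.0, coverage_deg=100.0, max_range=20.0),
    Speaker("right_array", (0.0, 0.0), aim_deg=45.0, coverage_deg=100.0, max_range=20.0),
]
seats = {"A": (-5.0, 5.0), "B": (0.0, 10.0), "C": (5.0, 5.0),
         "D": (0.0, -10.0), "E": (30.0, 30.0)}
counts, dead_zones, overlaps = audit_coverage(speakers, seats)
```

Here seat B sits where both arrays’ cones meet, while D lies behind the arrays and E is out of range, the kind of issues the ’624 patent aims to surface visually before an event.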

The ‘624 Patent, entitled “Light-based spatial audio metering,” was filed on July 11, 2022 and was issued on April 9, 2024. Yuan-Yi Fan and Neil Wakefield are listed as inventors.

Addressing visual challenges

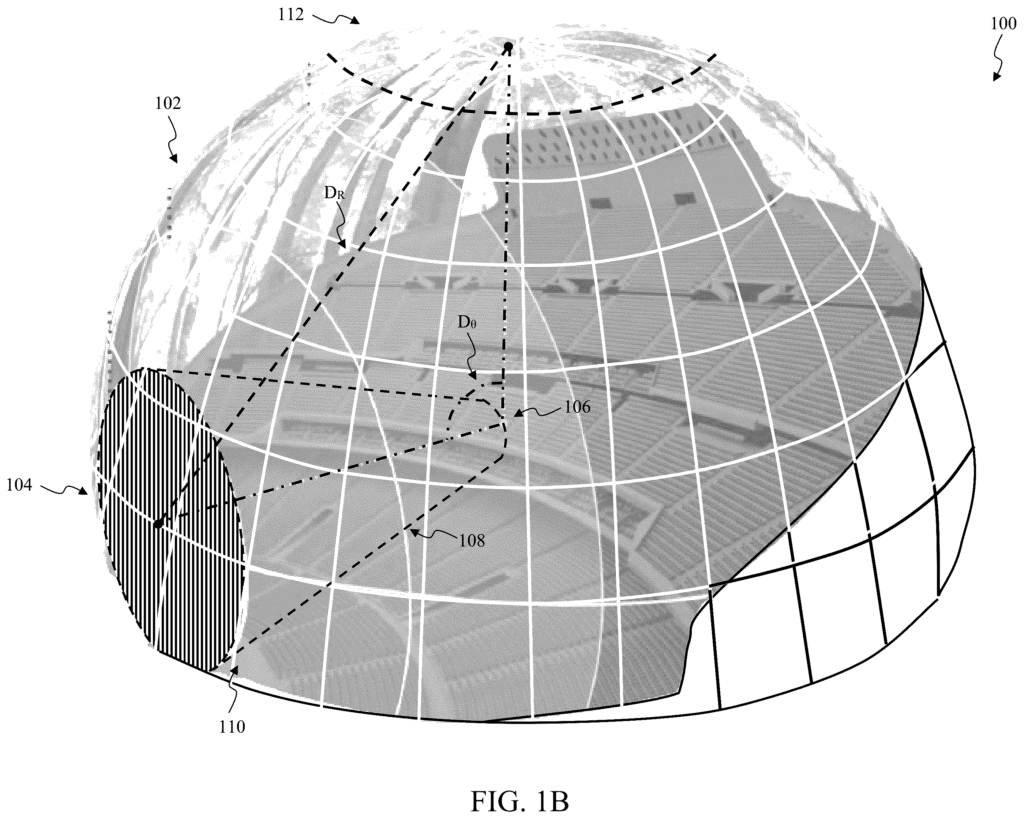

Sphere’s geometry, while an architectural marvel, makes it challenging to deliver seamless, visually engaging experiences across its expansive curved media surface. Producing undistorted content that resonates with a diverse audience requires advanced computational techniques and calibration. Conventional methods of capturing and projecting images onto three-dimensional media planes, such as using ultrawide-angle fisheye lenses, often produce high-resolution imagery only at the “prime viewing section” (typically the top of the display). These methods compromise image quality at the “springing,” or lower sections, which is where performers appear and audience attention is concentrated. This disparity forces viewers to shift their gaze upward, disrupting immersion and reducing engagement.

The proposed systems, outlined in three pending applications (U.S. Pat. App. Pub. No. 2024/0214676, U.S. Pat. App. Pub. No. 2024/0214535, and U.S. Pat. App. Pub. No. 2024/0214690), address these challenges with advanced image capture, storage, and projection technologies. By using a camera lens system that focuses light onto the image sensor non-uniformly, the system enhances optical quality at the sensor’s periphery. Since the periphery of the captured image corresponds to the lower sections of the media plane, designers can prioritize higher resolution where audience focus is most critical.
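Why a non-uniform lens helps can be shown with standard fisheye projection models. The applications’ actual lens design is not described here, so the sketch below uses the textbook stereographic projection as a stand-in for a lens that devotes more pixels to the edge of the image circle, and compares it with the equidistant and equisolid models. `pixels_per_degree` is a hypothetical helper; theta is the angle from the optical axis, with 90° being the horizon of a 180° fisheye.

```python
import math
from typing import Callable, Dict, Tuple

# Each entry: (r(f, theta), dr/dtheta(f, theta)) for a standard fisheye model,
# where theta is the angle from the optical axis in radians.
Mapping = Tuple[Callable[[float, float], float], Callable[[float, float], float]]
PROJECTIONS: Dict[str, Mapping] = {
    "equidistant": (lambda f, t: f * t,
                    lambda f, t: f),
    "equisolid": (lambda f, t: 2 * f * math.sin(t / 2),
                  lambda f, t: f * math.cos(t / 2)),
    "stereographic": (lambda f, t: 2 * f * math.tan(t / 2),
                      lambda f, t: f / math.cos(t / 2) ** 2),
}


def pixels_per_degree(projection: str, image_radius_px: float,
                      theta_deg: float, max_theta_deg: float = 90.0) -> float:
    """Radial pixels per degree at theta_deg, with the focal length chosen so
    that max_theta_deg lands exactly on the rim of the image circle."""
    radius_fn, slope_fn = PROJECTIONS[projection]
    f = image_radius_px / radius_fn(1.0, math.radians(max_theta_deg))
    return slope_fn(f, math.radians(theta_deg)) * math.pi / 180.0


# Compare center (zenith) and rim (springing) for a hypothetical 4000 px image circle.
for name in PROJECTIONS:
    center = pixels_per_degree(name, 4000, 0.0)
    rim = pixels_per_degree(name, 4000, 90.0)
    print(f"{name:>13}: center {center:5.1f} px/deg, rim {rim:5.1f} px/deg")
```

With the same field of view fitted to the same image circle, the equidistant model gives constant angular resolution, the equisolid model loses resolution toward the rim, and the stereographic model doubles its resolution from center to rim, the kind of periphery-weighted trade-off the filings describe.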

The system uses advanced mathematical techniques, including kernel-based sampling and functions (e.g., Ackley, Himmelblau, Rastrigin, Rosenbrock, and Shekel), to map 2D pixel coordinates onto a curved 3D media plane, interpolating color information for seamless projection. Additionally, intelligent redundant storage conserves computational and memory resources by focusing on key image sections, especially those in the audience’s line of sight. Together, these techniques help Sphere deliver high-quality visuals from various angles and positions, particularly in the performance areas where audience attention is directed.
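The filings describe kernel-based sampling with a range of functions; as a simpler stand-in, the Python sketch below shows the general inverse-mapping technique: for each point on the dome (azimuth and elevation), compute where it falls in an equidistant fisheye image and bilinearly interpolate the color there. The equidistant model, the zenith-at-center convention, and the function names are assumptions for illustration, not the applications’ method.

```python
import math
from typing import List, Tuple

RGB = Tuple[float, float, float]
Image = List[List[RGB]]  # image[row][col]


def dome_to_pixel(azimuth_deg: float, elevation_deg: float,
                  cx: float, cy: float, radius_px: float) -> Tuple[float, float]:
    """Equidistant fisheye model: zenith at the image center, horizon at the rim."""
    theta = math.radians(90.0 - elevation_deg)      # angle from the zenith
    r = radius_px * theta / (math.pi / 2)           # radius grows linearly with theta
    phi = math.radians(azimuth_deg)
    return cx + r * math.cos(phi), cy + r * math.sin(phi)


def bilinear(image: Image, x: float, y: float) -> RGB:
    """Blend the four pixels surrounding (x, y), clamping at the image border."""
    h, w = len(image), len(image[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0

    def lerp(a: RGB, b: RGB, t: float) -> RGB:
        return tuple(ai + (bi - ai) * t for ai, bi in zip(a, b))

    top = lerp(image[y0][x0], image[y0][x1], fx)
    bottom = lerp(image[y1][x0], image[y1][x1], fx)
    return lerp(top, bottom, fy)


def sample_dome(image: Image, azimuth_deg: float, elevation_deg: float) -> RGB:
    """Color to show at a dome point, looked up in the captured fisheye image."""
    h, w = len(image), len(image[0])
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    x, y = dome_to_pixel(azimuth_deg, elevation_deg, cx, cy, min(cx, cy))
    return bilinear(image, x, y)


# Tiny 3x3 test image whose brightness increases from left to right.
image = [[(float(c),) * 3 for c in range(3)] for _ in range(3)]
zenith = sample_dome(image, 0.0, 90.0)
halfway = sample_dome(image, 0.0, 45.0)
springing = sample_dome(image, 0.0, 0.0)
```

Working backward from dome points to source pixels, rather than pushing pixels forward onto the dome, avoids holes in the projected image; the interpolation step is where a different sampling kernel could be substituted.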

Enhanced viewer experience

During live events at Sphere, performers are typically positioned near the “springing” of the venue’s curved media plane, engaging with an audience seated around the “haunch” (i.e., halfway between the top and the base). Traditional systems often fail to capture and project high-quality visuals in this area, resulting in blurry or distorted images of the performers. The solutions outlined in the filings instead focus high-resolution imagery on the lower sections of the media plane, so audience members see sharp, vibrant visuals without awkward viewing angles. This approach maintains clarity, enhances various visual effects, and aligns with Sphere’s curved display to create an immersive experience for all attendees.

U.S. Pat. App. Pub. No. 2024/0214676, entitled “Capturing images for a spherical venue”; U.S. Pat. App. Pub. No. 2024/0214535, entitled “Projecting images on a spherical venue”; and U.S. Pat. App. Pub. No. 2024/0214690, entitled “Redundant storage of image data in an image recording system,” were all published on June 27, 2024. The listed inventors include Deanan Dasilva, Michael Graae, and Andrew Cochrane.

Sphere, with its iconic design and immersive audiovisuals, is proving to be more than an entertainment marvel. The venue offers engineers and artists an unparalleled platform for creating novel experiences that leverage its technological potential. From immersive virtual reality (VR) experiences and large esports tournaments to AI-driven live events and interactive performances, Sphere has the potential to become a testbed for the entertainment of the future.