The first augmented reality (AR) device is credited to Ivan Sutherland’s team at the University of Utah in the 1960s. The working prototype, called the Sword of Damocles, was a headset that used two small cathode ray tube displays as eyepieces, with a tube-like device attached to the top of the head to measure the user’s head position. The headset was connected by cable to a bulky computer, the PDP-1.

These days, AR technology comes in the form of lightweight gaming headsets and smart glasses. In a recent press release, Apple unveiled its battery-operated, AR-capable headset in a goggle-like form. The Apple Vision Pro is equipped with cameras and sensors that gather information about the environment and the user’s hand gestures. In contrast with the Sword of Damocles, this headset uses micro-OLED displays for the eyepieces and an R1 chip to process the gathered information.

Additionally, popular games like Pokémon Go have showcased how AR technology can be integrated into conventional smartphones, making it more convenient for users.

AR has come a long way from its origin as a university project. In this article, we look at three patent filings that apply AR to vehicle systems, evacuation systems, and extended reality (XR).

General Motors | Augmented reality head-up display for audio event awareness

The Americans with Disabilities Act (ADA) protects Americans with hearing impairments from being denied the right to drive a vehicle. Moreover, the Department of Motor Vehicles (DMV) typically does not screen applicants based on their ability to hear. While driving relies mostly on visual cues, being able to hear and identify audio signals such as sirens, horns, or approaching vehicles can reduce the risk of accidents.

This patent aims to convert auditory signals into visual indications. An array of microphones positioned on the vehicle collects the sounds, while supplementary sensors record data used to determine aspects such as the location of the sound source and its direction of motion. The audio signals and their associated contextual information are then processed by a machine learning algorithm that classifies and identifies the sounds. A repository of images provides a distinct graphical representation for each sound. Finally, the augmented reality interface displays that representation directly on the car’s windshield, where drivers and passengers can see it.
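The last two steps of that pipeline, looking up an image for a classified sound and placing it on the windshield, can be sketched as follows. This is a minimal illustration and not the method claimed in the patent: the class labels, icon paths, the 120-degree field of view, and the names `SoundEvent`, `HudElement`, and `to_hud_element` are all assumptions, and the microphone array and the machine learning classifier are treated as already having produced the event.

```python
from dataclasses import dataclass

# Hypothetical image repository: one icon per sound class (paths are made up).
ICON_REPOSITORY = {
    "siren": "icons/siren.png",
    "horn": "icons/horn.png",
    "approaching_vehicle": "icons/vehicle.png",
}
FALLBACK_ICON = "icons/unknown_sound.png"


@dataclass
class SoundEvent:
    """Output of the (not shown) classifier plus context from other sensors."""
    label: str          # sound class assigned by the classifier
    bearing_deg: float  # direction of the source; 0 = straight ahead, positive = right
    approaching: bool   # whether the source is moving toward the vehicle


@dataclass
class HudElement:
    icon: str
    x: float            # horizontal windshield position; 0.0 = far left, 1.0 = far right
    highlighted: bool


def to_hud_element(event: SoundEvent, field_of_view_deg: float = 120.0) -> HudElement:
    """Map a classified sound event to an icon and a windshield position."""
    half_fov = field_of_view_deg / 2.0
    # Sources outside the assumed field of view are pinned to the nearest edge.
    clamped = max(-half_fov, min(half_fov, event.bearing_deg))
    x = (clamped + half_fov) / field_of_view_deg
    icon = ICON_REPOSITORY.get(event.label, FALLBACK_ICON)
    return HudElement(icon=icon, x=x, highlighted=event.approaching)
```

For example, `to_hud_element(SoundEvent("siren", 0.0, True))` would place a highlighted siren icon at the horizontal center of the display. A real system would also need to handle several simultaneous sounds and avoid obstructing the driver’s view, which this sketch ignores.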

If implemented in a vehicle, this invention could significantly enhance driving safety for individuals with hearing disabilities by letting them perceive auditory cues they would otherwise miss.

US 11,645,038 was filed on May 31, 2022 and granted on May 9, 2023 to GM Global Technology Operations LLC, a subsidiary of General Motors.

National Disaster Management Research Institute | Method and System for Evacuation Route Guidance of Occupants Using Augmented Reality of Mobile Devices

In highly urbanized areas, medium- and large-scale buildings commonly line the streets. With the acceleration of industrialization, these structures incorporate complex design elements that make them difficult to navigate. A consequence of this complexity is the added challenge of evacuating people from these buildings during disasters.

This patent application proposes evacuating individuals from a structure with the help of an app installed on a mobile device. The app communicates with an off-site server that provides evacuation routes overlaid on a digital map. Live images captured by the device’s built-in camera give an accurate real-time view of the surroundings, which supports quick decision-making and safer evacuation. The app also embeds supplementary guidance in the visual display to help users exit quickly under duress.

The concept of using a mobile application for evacuation support is not new. However, this app distinguishes itself from similar inventions by customizing the evacuation route according to the nature of the disaster and the individual preferences of the user. The suggested routes can also be adjusted based on newly generated information at the server. In addition, the app uses image recognition to detect crucial equipment such as fire extinguishers and gives directions on how to use it. The mode of operation can also take battery life into account, recalculating how long the battery will last based on the user’s speed along the evacuation route.
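One way to picture the battery-aware mode selection is to compare the time the user needs to reach the exit with the time the battery is expected to last. The sketch below is only an interpretation of that idea: the function names, the linear drain model, the 1.5 safety margin, and the two mode names are assumptions for illustration and are not taken from the patent application.

```python
def minutes_to_exit(remaining_distance_m: float, speed_m_per_s: float) -> float:
    """Estimated time to finish the route at the user's current speed."""
    if speed_m_per_s <= 0:
        return float("inf")  # a user who is not moving never reaches the exit
    return remaining_distance_m / speed_m_per_s / 60.0


def minutes_of_battery(battery_percent: float, drain_percent_per_min: float) -> float:
    """Estimated battery runtime, assuming a constant (linear) drain rate."""
    if drain_percent_per_min <= 0:
        return float("inf")
    return battery_percent / drain_percent_per_min


def choose_mode(
    remaining_distance_m: float,
    speed_m_per_s: float,
    battery_percent: float,
    drain_percent_per_min: float,
    safety_margin: float = 1.5,  # assumed buffer; not a value from the filing
) -> str:
    """Pick a hypothetical operating mode for the rest of the evacuation."""
    needed = minutes_to_exit(remaining_distance_m, speed_m_per_s)
    available = minutes_of_battery(battery_percent, drain_percent_per_min)
    # Keep the full AR view only if the battery outlasts the route with margin.
    return "full_ar" if available >= needed * safety_margin else "power_saving"
```

With 120 m left at 1 m/s, 10% battery, and a drain of 2% per minute, the route takes about 2 minutes and the battery lasts about 5, so this sketch keeps the full AR view. Calling `choose_mode` again as the user speeds up or slows down mirrors the recalculation described above.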

In general, the invention described in this patent filing would be an advantageous tool in critical situations. Not only does it help occupants exit a structure safely, but it can also help calm them amid the confusion.

US 2023/0145066 was filed on November 30, 2021 by the National Disaster Management Research Institute of South Korea.

Splunk | Data visualization in an extended reality environment

A common scene in sci-fi movies depicting futuristic technology is a character accessing digital data without the use of a computer screen. Instead, we see a protagonist staring at a holographic image of a computer file that can be manipulated in the air with a swipe of the hand or a similar gesture. The main character may or may not wear a VR headset. Either way, the hero of the story can open, view, and close files through hand gestures.

This patent may not be as exciting as the scenes in sci-fi movies, but the concept is the same. In this patent, an extended reality (XR) environment takes the place of a computer screen, providing the advantage of an unbounded viewing area. Digital data are represented by dashboard panels distributed across the XR environment. Examples of such data include process performance metrics and blue screen crashes, which can be visualized as charts or graphs. As in the movie scenarios, a user can interact with the XR elements to view the desired data. While this patent emphasizes an XR environment, the invention can also use an AR environment, so that the dashboard items appear to float in front of the user.
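To make the idea of panels "distributed across the XR environment" more concrete, here is a small sketch that spreads dashboard panels along an arc in front of the user. The arc layout itself, the function name `arc_layout`, the coordinate convention (user at the origin, looking along +z, y pointing up), and the default distances are assumptions for illustration; the patent does not prescribe this arrangement.

```python
import math
from typing import List, Tuple


def arc_layout(
    panel_count: int,
    radius_m: float = 2.0,   # assumed distance from the user to each panel
    arc_deg: float = 120.0,  # assumed angular width of the arc
    height_m: float = 1.5,   # assumed panel height, roughly eye level
) -> List[Tuple[float, float, float]]:
    """Return (x, y, z) positions for panels spread evenly along an arc."""
    if panel_count <= 0:
        return []
    if panel_count == 1:
        angles = [0.0]  # a single panel sits straight ahead
    else:
        step = arc_deg / (panel_count - 1)
        angles = [-arc_deg / 2.0 + i * step for i in range(panel_count)]

    positions = []
    for angle in angles:
        theta = math.radians(angle)
        # Every panel stays at the same distance from the user.
        positions.append((radius_m * math.sin(theta), height_m, radius_m * math.cos(theta)))
    return positions
```

Calling `arc_layout(3, arc_deg=90.0)` gives one panel to the left, one straight ahead, and one to the right, all 2 m from the user. Because the space is not limited to a screen, more panels can be added by widening the arc or stacking further rows.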

US 11,644,940 was filed on January 31, 2019 and granted on May 9, 2023 to SPLUNK Inc.

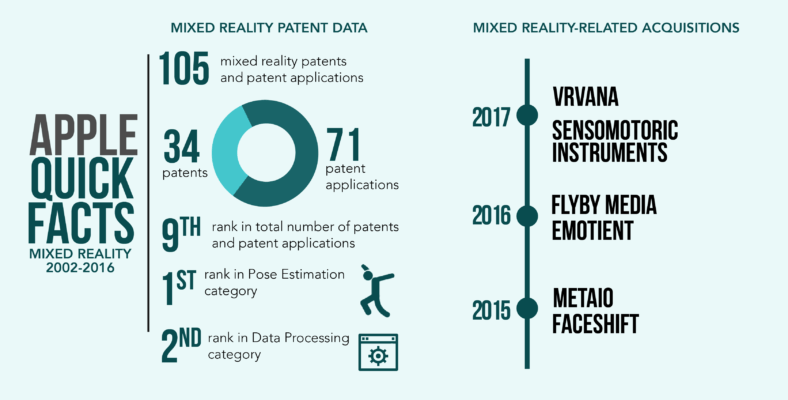

More about augmented reality in our Mixed Reality Patent Landscape Report.