Researchers from the Korea Institute of Science and Technology (KIST) are seeking to patent a system for controlling avatars in real time within a virtual reality (VR) environment using a person’s biosignals.

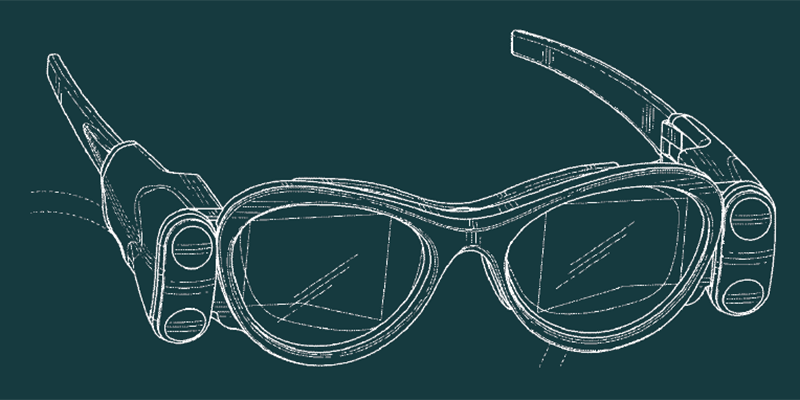

The system primarily aims to understand the user’s intentions by measuring and analyzing certain biosignals, like electrical activity from the brain, muscles, or eyes. It uses a brain–computer interface (BCI) to measure those biosignals and generate corresponding control signals for the user’s virtual avatar.

Such systems have been studied extensively in a variety of applications, including cognition-related research, motor rehabilitation, and control of electronic devices such as electric wheelchairs and even exoskeleton robots.

One particular application of the technology is helping patients gradually improve their cognitive and motor functions through suitable virtual training programs. VR lets users go beyond their visual and physical limitations, allowing a unique approach to motor rehabilitation for patients who may have suffered paralysis or other neurological impairments.

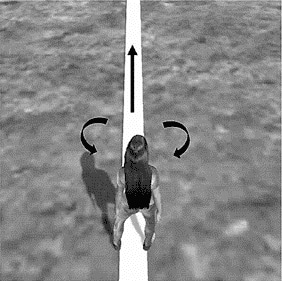

The invention is aimed at delivering possible long-term improvement to patients’ cognitive and motor functions. In one example, a program would train users to achieve target biosignals like brainwave states, eye movements, and muscle contractions/relaxations.

The system may also use the virtual avatar’s motions as real-time feedback for users attempting to produce specific biosignals. It may then evaluate and score the accuracy and other aspects of the avatar’s movement, giving the user another layer of feedback. This could increase a patient’s motivation during rehabilitation.

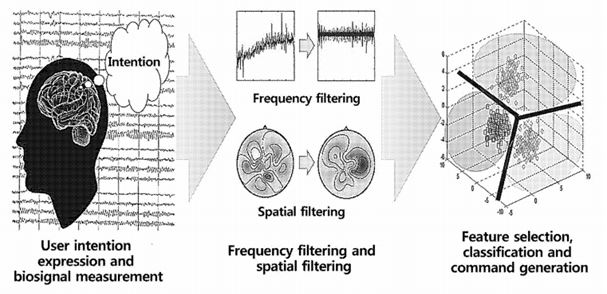

The KIST researchers’ invention specifically measures the user’s electroencephalogram (EEG), electromyogram (EMG), and/or electrooculogram (EOG) signals using sensors (e.g. electrode sensors). The signals are processed and analyzed using a variety of techniques, including frequency filtering, spatial filtering, feature selection, and classification, to generate the control signals needed to command the virtual avatar in real time.
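The processing chain described above can be sketched in a few lines of Python. This is only an illustrative outline, not the method claimed in the patent application: the function names, the 8–13 Hz band, the common-average-reference spatial filter, the nearest-centroid classifier, and the `"move"`/`"rest"` command labels are all assumptions chosen to keep the example small.

```python
import math

def common_average_reference(channels):
    """Spatial filter (assumed choice): subtract the across-channel mean from every sample."""
    n_ch = len(channels)
    means = [sum(col) / n_ch for col in zip(*channels)]
    return [[x - m for x, m in zip(ch, means)] for ch in channels]

def band_power(signal, fs, f_lo, f_hi):
    """Frequency filtering + feature: mean DFT power of the bins inside [f_lo, f_hi] Hz."""
    n = len(signal)
    total, count = 0.0, 0
    for k in range(1, n // 2):
        freq = k * fs / n
        if f_lo <= freq <= f_hi:
            re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(signal))
            im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(signal))
            total += (re * re + im * im) / (n * n)
            count += 1
    return total / count if count else 0.0

def classify(features, centroids):
    """Nearest-centroid classifier: return the label whose centroid is closest."""
    def dist(a, b):
        return sum((p - q) ** 2 for p, q in zip(a, b))
    return min(centroids, key=lambda label: dist(features, centroids[label]))

def control_signal(channels, fs, centroids, band=(8.0, 13.0)):
    """Turn one window of multi-channel samples into a hypothetical avatar command."""
    filtered = common_average_reference(channels)
    features = [band_power(ch, fs, *band) for ch in filtered]
    return classify(features, centroids)

# Synthetic one-second window at 100 Hz: a 10 Hz rhythm on channel 0 plus an
# offset shared by all three channels (removed by the spatial filter).
fs = 100
t = [i / fs for i in range(fs)]
rhythm = [math.sin(2 * math.pi * 10 * x) for x in t]
offset = [0.5] * fs
active = [[r + o for r, o in zip(rhythm, offset)], offset[:], offset[:]]
idle = [offset[:], offset[:], offset[:]]

# Hypothetical class centroids; in practice these would be learned from calibration data.
centroids = {"move": [0.02, 0.005, 0.005], "rest": [0.0, 0.0, 0.0]}
command_active = control_signal(active, fs, centroids)
command_idle = control_signal(idle, fs, centroids)
```

A real system would run this on short sliding windows of sensor data and map each predicted label to an avatar motion; the feature set and classifier would be selected and trained per user.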

The use of EEG, EMG, and EOG signals as a basis for virtual avatar control has only been explored over the past few years, with various studies examining their viability across a range of applications. The required equipment and the overall BCI system setup have also been the subject of extensive research in recent years, with each successive development aiming to improve overall power and flexibility.

The adoption of this technology in life science research and applications may still be in its infancy, but each iteration opens up new ways to complement, or possibly replace, current approaches in the field of medical technology.

The patent application, Biosignal-Based Avatar Control System and Method, was filed on November 27, 2019 with the U.S. Patent and Trademark Office under application number 16/698380 (publication number 20210055794). The inventors named on the pending patent are Song Joo Lee, Hyung Min Kim, Laehyun Kim, and Keun Tae Kim. The patent application is assigned to the Korea Institute of Science and Technology.